During its inaugural developer conference Thursday, Anthropic launched two new AI models that the startup claims are among the industry’s best, at least in terms of how they score on popular benchmarks.

Claude Opus 4 and Claude Sonnet 4, part of Anthropic’s new Claude 4 family of models, can analyze large datasets, execute long-horizon tasks, and take complex actions, according to the company. Both models were tuned to perform well on programming tasks, Anthropic says, making them well-suited for writing and editing code.

Both paying users and users of the company’s free chatbot apps will get access to Sonnet 4, but only paying users will get access to Opus 4. For Anthropic’s API, via Amazon’s Bedrock platform and Google’s Vertex AI, Opus 4 will be priced at $15/$75 per million tokens (input/output) and Sonnet 4 at $3/$15 per million tokens (input/output).
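To make those rates concrete, here is a minimal Python sketch that turns token counts into a dollar estimate. The prices are the ones quoted above; the dictionary keys, the `Pricing` class, and the `estimate_cost` helper are illustrative names invented for this example, not Anthropic API identifiers, and the token counts are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per million input tokens
    output_per_million: float  # USD per million output tokens


# Prices as quoted in the article. The keys are illustrative labels only,
# not official API model identifiers.
PRICES = {
    "opus-4": Pricing(input_per_million=15.0, output_per_million=75.0),
    "sonnet-4": Pricing(input_per_million=3.0, output_per_million=15.0),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for one request."""
    p = PRICES[model]
    total = (input_tokens * p.input_per_million
             + output_tokens * p.output_per_million)
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 200,000 input tokens and 20,000 output tokens.
    for name in PRICES:
        print(f"{name}: ${estimate_cost(name, 200_000, 20_000):.2f}")
```

For that hypothetical workload the estimate comes to $4.50 on Opus 4 and $0.90 on Sonnet 4, a fivefold gap that follows directly from the listed rates.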

Tokens are the raw bits of data that AI models work with. A million tokens is equivalent to about 750,000 words, roughly 163,000 words longer than “War and Peace.”
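The arithmetic behind that comparison can be sketched in a few lines of Python. The 0.75 words-per-token ratio is only the rule of thumb implied by the figures above (actual ratios vary by tokenizer and text), and the function names are invented for this example.

```python
import math

# Rule of thumb from the article: 1,000,000 tokens is about 750,000 words.
# This is an approximation; real ratios depend on the tokenizer and the text.
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: int) -> int:
    """Approximate word count for a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Approximate token count for a given number of words, rounded up."""
    return math.ceil(words / WORDS_PER_TOKEN)


# The length of "War and Peace" implied by the article's comparison.
implied_novel_words = tokens_to_words(1_000_000) - 163_000

if __name__ == "__main__":
    print(implied_novel_words)                   # 587000
    print(words_to_tokens(implied_novel_words))  # 782667
```

By this estimate, the novel would come to a little under 800,000 tokens.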

Anthropic’s Claude 4 models arrive as the company looks to substantially grow revenue. Reportedly, the outfit, founded by ex-OpenAI researchers, aims to notch $12 billion in earnings in 2027, up from a projected $2.2 billion this year. Anthropic recently closed a $2.5 billion credit facility and raised billions of dollars from Amazon and other investors in anticipation of the rising costs associated with developing frontier models.

Rivals haven’t made it easy to maintain pole position in the AI race. While Anthropic launched a new flagship AI model earlier this year, Claude Sonnet 3.7, alongside an agentic coding tool called Claude Code, competitors, including OpenAI and Google, have raced to outdo the company with powerful models and dev tooling of their own.

Anthropic is playing for keeps with Claude 4.

The more capable of the two models introduced today, Opus 4, can maintain “focused effort” across many steps in a workflow, Anthropic says. Meanwhile, Sonnet 4, designed as a “drop-in replacement” for Sonnet 3.7, improves in coding and math compared to Anthropic’s previous models and follows instructions more precisely, according to the company.

The Claude 4 family is also less likely than Sonnet 3.7 to engage in “reward hacking,” claims Anthropic. Reward hacking, also known as specification gaming, is a behavior where models take shortcuts and exploit loopholes to complete tasks.

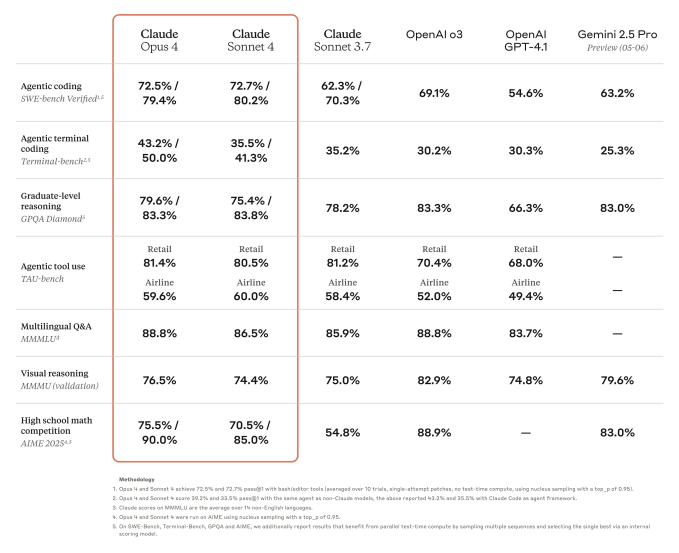

To be clear, these improvements haven’t yielded the world’s best models by every benchmark. For example, while Opus 4 beats Google’s Gemini 2.5 Pro and OpenAI’s o3 and GPT-4.1 on SWE-bench Verified, which is designed to evaluate a model’s coding abilities, it can’t surpass o3 on the multimodal evaluation MMMU or GPQA Diamond, a set of PhD-level biology-, physics-, and chemistry-related questions.

Still, Anthropic is releasing Opus 4 under stricter safeguards, including beefed-up harmful content detectors and cybersecurity defenses. The company claims its internal testing found that Opus 4 may “substantially increase” the ability of someone with a STEM background to obtain, produce, or deploy chemical, biological, or nuclear weapons, reaching Anthropic’s “ASL-3” model specification.

Both Opus 4 and Sonnet 4 are “hybrid” models, Anthropic says, meaning they are capable of near-instant responses as well as extended thinking for deeper reasoning (to the extent AI can “reason” and “think” as humans understand these concepts). With reasoning mode switched on, the models can take more time to consider possible solutions to a given problem before answering.

As the models reason, they’ll show a “user-friendly” summary of their thought process, Anthropic says. Why not show the whole thing? Partially to protect Anthropic’s “competitive advantages,” the company admits in a draft blog post provided to TechCrunch.

Opus 4 and Sonnet 4 can use multiple tools, like search engines, in parallel, and alternate between reasoning and tools to improve the quality of their answers. They can also extract and save information in “memory” to handle tasks more reliably, building what Anthropic describes as “tacit knowledge” over time.

To make the models more programmer-friendly, Anthropic is rolling out upgrades to the aforementioned Claude Code. Claude Code, which lets developers run specific tasks through Anthropic’s models directly from a terminal, now integrates with IDEs and offers an SDK that lets devs connect it with third-party applications.

The Claude Code SDK, announced earlier this week, enables running Claude Code as a subprocess on supported operating systems, providing a way to build AI-powered coding assistants and tools that leverage Claude models’ capabilities.
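As a rough illustration of the subprocess pattern, the Python sketch below shells out to a command-line tool and captures its output. It is not taken from Anthropic’s documentation: the executable name `claude`, the `-p` (non-interactive prompt) flag, and the `--output-format json` option are assumptions about the CLI that should be checked against the official reference, and `build_command` and `run_claude_code` are hypothetical helper names.

```python
import shutil
import subprocess


def build_command(prompt: str, executable: str = "claude") -> list[str]:
    """Build the argument list for a one-shot, non-interactive run.

    ASSUMPTION: the executable name and both flags are not confirmed by the
    article; verify them against the official CLI documentation.
    """
    return [executable, "-p", prompt, "--output-format", "json"]


def run_claude_code(prompt: str, timeout_s: float = 300.0) -> str:
    """Run the CLI as a subprocess and return its standard output."""
    cmd = build_command(prompt)
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError(f"{cmd[0]!r} was not found on PATH")
    result = subprocess.run(
        cmd,
        capture_output=True,  # collect stdout and stderr instead of printing
        text=True,            # decode bytes to str
        timeout=timeout_s,    # avoid hanging indefinitely on a long task
        check=True,           # raise CalledProcessError on a non-zero exit
    )
    return result.stdout


if __name__ == "__main__":
    print(build_command("Summarize the failing tests in this repository"))
```

Passing the arguments as a list, rather than as a single shell string, keeps the prompt from being interpreted by the shell.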

Anthropic has released Claude Code extensions and connectors for Microsoft’s VS Code, JetBrains, and GitHub. The GitHub connector allows developers to tag Claude Code to respond to reviewer feedback, as well as to attempt to fix errors in, or otherwise modify, code.

AI models still struggle to code quality software. Code-generating AI tends to introduce security vulnerabilities and errors, owing to weaknesses in areas like the ability to understand programming logic. Yet their promise to boost coding productivity is pushing companies and developers to rapidly adopt them.

Anthropic, acutely aware of this, is promising more frequent model updates.

“We’re … shifting to more frequent model updates, delivering a steady stream of improvements that bring breakthrough capabilities to customers faster,” wrote the startup in its draft post. “This approach keeps you at the cutting edge as we continuously refine and enhance our models.”