Anthropic is releasing a new frontier AI model called Claude 3.7 Sonnet, which the company designed to “think” about questions for as long as users want it to.

Anthropic calls Claude 3.7 Sonnet the industry’s first “hybrid AI reasoning model,” because it’s a single model that can give both real-time answers and more considered, “thought-out” answers to questions. Users can choose whether to activate the AI model’s “reasoning” abilities, which prompt Claude 3.7 Sonnet to “think” for a short or long period of time.

The model represents Anthropic’s broader effort to simplify the user experience around its AI products. Most AI chatbots today have a daunting model picker that forces users to choose from several different options that vary in cost and capability. Labs like Anthropic would rather you not have to think about it; ideally, one model does all the work.

Claude 3.7 Sonnet is rolling out to all users and developers on Monday, Anthropic said, but only people who pay for Anthropic’s premium Claude chatbot plans will get access to the model’s reasoning features. Free Claude users will get the standard, non-reasoning version of Claude 3.7 Sonnet, which Anthropic claims outperforms its previous frontier AI model, Claude 3.5 Sonnet. (Yes, the company skipped a number.)

Claude 3.7 Sonnet costs $3 per million input tokens (meaning you could enter roughly 750,000 words, more words than the entire “Lord of the Rings” series, into Claude for $3) and $15 per million output tokens. That makes it more expensive than OpenAI’s o3-mini ($1.10 per 1 million input tokens/$4.40 per 1 million output tokens) and DeepSeek’s R1 (55 cents per 1 million input tokens/$2.19 per 1 million output tokens), but keep in mind that o3-mini and R1 are strictly reasoning models, not hybrids like Claude 3.7 Sonnet.
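To make the price comparison concrete, here is a minimal Python sketch of the per-token arithmetic, using the launch prices quoted above. The ratio of roughly 0.75 English words per token is an assumption implied by the 750,000-word figure (real ratios vary by text and tokenizer), and the keys in `PRICES` are illustrative labels, not official API model identifiers.

```python
# Launch prices in US dollars per 1 million tokens, as quoted in the article:
# (input price, output price). Keys are illustrative labels, not API model IDs.
PRICES = {
    "claude-3.7-sonnet": (3.00, 15.00),
    "o3-mini": (1.10, 4.40),
    "deepseek-r1": (0.55, 2.19),
}

# Assumption: about 0.75 English words per token, the ratio implied by
# "1 million tokens is roughly 750,000 words". Actual ratios vary.
WORDS_PER_TOKEN = 0.75


def words_to_tokens(words: int) -> int:
    """Rough token estimate for a given English word count."""
    return round(words / WORDS_PER_TOKEN)


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request; input and output tokens are billed per million at separate rates."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    tokens_in = words_to_tokens(750_000)  # roughly one "Lord of the Rings"-sized input
    for name in PRICES:
        # Same workload for every model: the large input plus a 10,000-token answer.
        cost = request_cost(name, tokens_in, 10_000)
        print(f"{name}: ${cost:.2f}")
```

The sketch ignores anything beyond the list prices (discounts, caching, or the extra output tokens a reasoning model may spend), so it is only a rough guide to relative cost.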

Claude 3.7 Sonnet is Anthropic’s first AI model that can “reason,” a technique many AI labs have turned to as traditional methods of improving AI performance taper off.

Reasoning models like o3-mini, R1, Google’s Gemini 2.0 Flash Thinking, and xAI’s Grok 3 (Think) use more time and computing power before answering questions. The models break problems down into smaller steps, which tends to improve the accuracy of the final answer. Reasoning models aren’t necessarily thinking or reasoning the way a human would, but their process is modeled after deduction.

Eventually, Anthropic would like Claude to figure out how long it should “think” about questions on its own, without needing users to select controls in advance, Anthropic’s product and research lead, Dianne Penn, told TechCrunch in an interview.

“Similar to how humans don’t have two separate brains for questions that can be answered immediately versus those that require thought,” Anthropic wrote in a blog post shared with TechCrunch, “we regard reasoning as simply one of the capabilities a frontier model should have, to be smoothly integrated with other capabilities, rather than something to be provided in a separate model.”

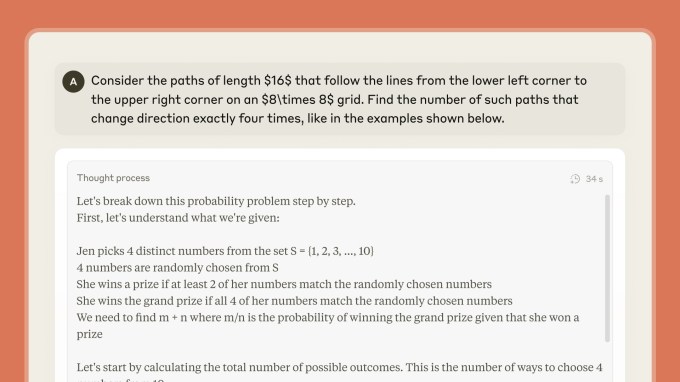

Anthropic says it’s allowing Claude 3.7 Sonnet to show its internal planning phase through a “visible scratch pad.” Penn told TechCrunch users will see Claude’s full thinking process for most prompts, but that some portions may be redacted for trust and safety purposes.

Anthropic says it optimized Claude’s thinking modes for real-world tasks, such as difficult coding problems or agentic tasks. Developers tapping Anthropic’s API can control the “budget” for thinking, trading speed and cost for quality of answer.
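For developers, the thinking budget is set per request. The Python sketch below only builds a request body for Anthropic’s Messages API and does not send it. The field names (`thinking`, `type`, `budget_tokens`), the model ID, and the 1,024-token minimum reflect Anthropic’s launch-time documentation as we understand it and are assumptions to check against the current API reference; `build_request` is a hypothetical helper, not part of any SDK.

```python
import json
from typing import Optional

# Assumed model identifier for the launch snapshot; verify against Anthropic's docs.
MODEL_ID = "claude-3-7-sonnet-20250219"
# Assumed documented minimum for budget_tokens.
MIN_THINKING_BUDGET = 1024


def build_request(prompt: str, max_tokens: int, thinking_budget: Optional[int] = None) -> dict:
    """Hypothetical helper: build a Messages API body.

    thinking_budget=None asks for a standard, non-reasoning answer; a number
    enables extended thinking with that many tokens of budget.
    """
    body = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget is not None:
        # Assumption: the API requires the budget to be at least the minimum
        # and strictly below max_tokens, leaving room for the final answer.
        if not MIN_THINKING_BUDGET <= thinking_budget < max_tokens:
            raise ValueError("thinking_budget must be >= 1024 and below max_tokens")
        body["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return body


if __name__ == "__main__":
    quick = build_request("Explain this project structure.", max_tokens=1024)
    deep = build_request("Explain this project structure.", max_tokens=16000, thinking_budget=8000)
    print(json.dumps(quick, indent=2))
    print(json.dumps(deep, indent=2))
```

The same model serves both requests; only the presence and size of the thinking budget changes, which is the “hybrid” design the company describes.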

On one test measuring real-world coding tasks, SWE-Bench, Claude 3.7 Sonnet was 62.3% accurate, compared to OpenAI’s o3-mini model, which scored 49.3%. On another test measuring an AI model’s ability to interact with simulated users and external APIs in a retail setting, TAU-Bench, Claude 3.7 Sonnet scored 81.2%, compared to OpenAI’s o1 model, which scored 73.5%.

Anthropic also says Claude 3.7 Sonnet will refuse to answer questions less often than its previous models, claiming the model is capable of making more nuanced distinctions between harmful and benign prompts. Anthropic says it reduced unnecessary refusals by 45% compared to Claude 3.5 Sonnet. This comes at a time when some other AI labs are rethinking their approach to restricting their AI chatbots’ answers.

In addition to Claude 3.7 Sonnet, Anthropic is also releasing an agentic coding tool called Claude Code. Launching as a research preview, the tool lets developers run specific tasks through Claude directly from their terminal.

In a demo, Anthropic employees showed how Claude Code can analyze a coding project with a simple command such as, “Explain this project structure.” Using plain English in the command line, a developer can modify a codebase. Claude Code will describe its edits as it makes changes, and can even test a project for errors or push it to a GitHub repository.

Claude Code will initially be available to a limited number of users on a “first come, first served” basis, an Anthropic spokesperson told TechCrunch.

Anthropic is releasing Claude 3.7 Sonnet at a time when AI labs are shipping new AI models at a breakneck pace. Anthropic has historically taken a more methodical, safety-focused approach. But this time, the company is looking to lead the pack.

For how long, though, is the question. OpenAI may be close to releasing a hybrid AI model of its own; the company’s CEO, Sam Altman, has said it will arrive in “months.”